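Implementing a one-shot learning task in Keras typically relies on a Siamese neural network architecture. A Siamese network is a twin-tower network in which two identical subnetworks share parameters and are used to measure the similarity between two inputs.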
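To make the comparison idea concrete, the sketch below shows how a one-shot prediction could be made once embeddings are available: the query is assigned the class of the single reference example it is closest to. It is plain Python for illustration only. The names `euclidean` and `one_shot_predict` and the toy 2-D embeddings are assumptions, not part of the Keras code in this article.

```python
import math

def euclidean(u, v):
    # Euclidean distance between two equal-length embedding vectors
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def one_shot_predict(query, support):
    """Return the label whose single reference embedding is closest to the query.

    support: dict mapping each class label to exactly one reference embedding
    """
    return min(support, key=lambda label: euclidean(query, support[label]))

# Hypothetical embeddings: one reference example per class
support = {"cat": [0.9, 0.1], "dog": [0.1, 0.8]}
print(one_shot_predict([0.8, 0.2], support))  # cat
```

In practice the embeddings would come from the trained shared subnetwork rather than being written by hand.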

The general steps for implementing a one-shot learning task in Keras are as follows:

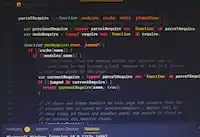

- Define the base structure of the Siamese network, that is, the embedding subnetwork that both inputs pass through:

    from keras.models import Model
    from keras.layers import Input, Conv2D, Flatten, Dense

    def create_siamese_network(input_shape):
        # Shared embedding subnetwork: maps one image to a 128-dimensional feature vector
        input_layer = Input(shape=input_shape)
        conv1 = Conv2D(32, (3, 3), activation='relu')(input_layer)
        # Add more convolutional layers if needed
        flattened = Flatten()(conv1)
        dense1 = Dense(128, activation='relu')(flattened)
        model = Model(inputs=input_layer, outputs=dense1)
        return model

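- Create a single instance of the network and apply it to both inputs so that the two branches share parameters:

    input_shape = (28, 28, 1)
    # One model instance is called twice, so both branches reuse the same weights
    siamese_network = create_siamese_network(input_shape)
    input_a = Input(shape=input_shape)
    input_b = Input(shape=input_shape)
    output_a = siamese_network(input_a)
    output_b = siamese_network(input_b)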

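- Define a distance function that measures how similar the two embeddings are (a smaller distance means more similar inputs):

    from keras import backend as K
    from keras.layers import Lambda

    # Written against the Keras 2 backend API (K.sum, K.square, ...);
    # in Keras 3 these operations are provided by keras.ops
    def euclidean_distance(vects):
        x, y = vects
        sum_square = K.sum(K.square(x - y), axis=1, keepdims=True)
        # Clamp to epsilon before the square root to avoid NaN gradients when x equals y
        return K.sqrt(K.maximum(sum_square, K.epsilon()))

    def eucl_dist_output_shape(shapes):
        shape1, shape2 = shapes
        return (shape1[0], 1)

    distance = Lambda(euclidean_distance, output_shape=eucl_dist_output_shape)([output_a, output_b])

- Add a sigmoid output on top of the distance, then compile and train the model:

    from keras.models import Model
    from keras.layers import Dense
    from keras.optimizers import Adam

    # binary_crossentropy expects a probability in [0, 1], so map the unbounded
    # distance to a same-class probability with a single sigmoid unit
    prediction = Dense(1, activation='sigmoid')(distance)
    siamese_model = Model(inputs=[input_a, input_b], outputs=prediction)
    siamese_model.compile(loss='binary_crossentropy', optimizer=Adam(), metrics=['accuracy'])
    # X_train_pairs has shape (num_pairs, 2, 28, 28, 1); y_train holds one label per pair
    siamese_model.fit([X_train_pairs[:, 0], X_train_pairs[:, 1]], y_train, batch_size=128, epochs=10)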
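When a Siamese model is trained directly on a raw distance output, a commonly used objective is contrastive loss rather than cross-entropy. This is a swapped-in technique, not something the code above uses. The sketch below evaluates the formula in plain Python to show its behavior. `contrastive_loss` and `margin` are illustrative names, labels are assumed to be 1 for same-class pairs and 0 otherwise, and a Keras version would express the same arithmetic with tensor operations.

```python
def contrastive_loss(y_true, distances, margin=1.0):
    """Mean contrastive loss over a batch of pairs.

    y_true:    1 for same-class pairs, 0 for different-class pairs (assumed convention)
    distances: predicted distance for each pair
    margin:    different-class pairs farther apart than this incur no penalty
    """
    total = 0.0
    for y, d in zip(y_true, distances):
        pull = y * d ** 2                            # same class: penalize large distance
        push = (1 - y) * max(margin - d, 0.0) ** 2   # different class: penalize distance below margin
        total += pull + push
    return total / len(y_true)

print(contrastive_loss([1, 0], [0.0, 2.0]))  # 0.0 (both pairs are already ideal)
print(contrastive_loss([1, 0], [0.5, 0.5]))  # 0.25
```

The margin bounds how far apart different-class pairs are pushed, so pairs that are already well separated stop contributing to the loss.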

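Training requires a dataset of positive and negative sample pairs: a positive pair contains two samples of the same class, and a negative pair contains two samples of different classes. In the fit call, X_train_pairs holds the input pairs, indexed so that X_train_pairs[:, 0] and X_train_pairs[:, 1] are the first and second sample of each pair, and y_train holds the corresponding labels (for example, 1 for a positive pair and 0 for a negative pair).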
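The pair construction described above could be sketched as follows. This is one possible approach under stated assumptions, not the article's own data pipeline: the helper name `make_pair_indices`, the one-positive-plus-one-negative sampling per sample, and the 1/0 label convention are all illustrative choices. It works on class labels only and returns index pairs, so it does not depend on how the images are stored.

```python
import random
from collections import defaultdict

def make_pair_indices(labels, seed=0):
    """Build index pairs and targets: 1 for same-class pairs, 0 for different-class pairs."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    classes = list(by_class)
    if len(classes) < 2:
        raise ValueError("need at least two classes to form negative pairs")

    pairs, targets = [], []
    for idx, label in enumerate(labels):
        same = [j for j in by_class[label] if j != idx]
        if not same:
            continue  # a class with a single sample cannot form a positive pair
        # Positive pair: this sample with another sample of its own class
        pairs.append((idx, rng.choice(same)))
        targets.append(1)
        # Negative pair: this sample with a sample drawn from a different class
        other = rng.choice([c for c in classes if c != label])
        pairs.append((idx, rng.choice(by_class[other])))
        targets.append(0)
    return pairs, targets

pairs, targets = make_pair_indices([0, 0, 1, 1, 2, 2])
print(len(pairs), sum(targets))  # 12 6
```

If the samples are stored in a NumPy array X_train of shape (num_samples, 28, 28, 1), indexing it with the pairs converted to an integer array, X_train[np.array(pairs)], yields an array of shape (num_pairs, 2, 28, 28, 1), which matches the X_train_pairs[:, 0] and X_train_pairs[:, 1] slicing used in the fit call.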