
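Helm packages software and supports versioned, controlled releases, which greatly reduces the complexity of deploying and managing Kubernetes applications.

As workloads move to containers and microservice architectures, large monoliths are broken into multiple services. This tames the complexity of the monolith and lets each microservice be deployed and scaled independently, enabling agile development with rapid iteration and deployment. Everything has two sides, though: splitting an application into many components sharply increases the number of services. Under Kubernetes orchestration, each component has its own resource files and can be deployed and scaled independently, which creates a number of challenges when Kubernetes is used for application orchestration: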

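Managing, editing, and updating a large number of K8s configuration files

Deploying a complex K8s application that consists of many configuration files

Sharing and reusing K8s configurations and applications

Parameterizing configuration templates to support multiple environments

Managing application releases: rollback, diff, and release history

Controlling individual stages of a deployment cycle

Verifying a release after it is deployed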

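Helm addresses exactly these problems.

Helm packages Kubernetes resources (such as Deployments, Services, or Ingresses) into a chart, and charts are stored in and shared through chart repositories. Helm makes releases configurable, supports version management of an application's release configuration, and simplifies versioning, packaging, releasing, deleting, and updating applications deployed on Kubernetes.

This article briefly covers Helm's purpose, architecture, installation, and usage. The examples use Helm v2.11.0, which includes the Tiller server-side component.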

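Purpose

As a package manager for Kubernetes, Helm can:

Create new charts

Package a chart into a tgz archive

Upload charts to a chart repository or download charts from one

Install or uninstall charts in a Kubernetes cluster

Manage the release lifecycle of charts installed with Helm

Helm has three key concepts:

chart: contains the information needed to create an instance of a Kubernetes application

config: contains the configuration for an application release

release: a running instance of a chart combined with its config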

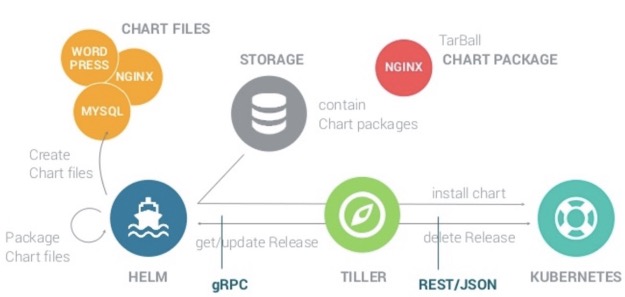

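Architecture

Components

Helm has the following two components:

The Helm client is the command-line tool for users. It is mainly responsible for:

Local chart development

Repository management

Interacting with the Tiller server

Sending charts to be installed

Querying release information

Requesting upgrades or uninstalls of existing releases

The Tiller server runs inside the Kubernetes cluster and interacts with the Helm client and the Kubernetes API server. It is mainly responsible for:

Listening for requests from the Helm client

Combining a chart and its config to build a release

Installing charts into the Kubernetes cluster and tracking the resulting releases

Upgrading or uninstalling charts by interacting with Kubernetes

In short, the client manages charts, and the server manages releases.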

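Implementation

Helm client

The Helm client is written in Go and uses gRPC to interact with the Tiller server.

Helm server

The Tiller server is also written in Go. It provides a gRPC server for the Helm client and uses the Kubernetes client library to communicate with Kubernetes; the library currently uses REST+JSON. The Tiller server has no database of its own and currently stores its data in Kubernetes ConfigMaps.

Note: write configuration files in YAML wherever possible.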

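Installation

If your setup differs from mine, read the official quick guide, which covers the core installation flow and several variations.

Helm release page

Prerequisites

A Kubernetes cluster

An understanding of the Kubernetes context security mechanism

Download the Helm package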

wget https://storage.googleapis.com/kubernetes-helm/helm-v2.11.0-linux-amd64.tar.gz

Configure the ServiceAccount and rules

My environment uses RBAC (Role-Based Access Control) authorization, so the ServiceAccount and rules must be configured before installing Helm. For the official configuration, see the Role-based Access Control documentation.

Grant Helm cluster-wide permissions

RBAC manifest (saved as rbac-config.yaml):

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: tiller
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: tiller
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: cluster-admin
    subjects:
      - kind: ServiceAccount
        name: tiller
        namespace: kube-system

cluster-admin is a role that Kubernetes creates by default, so it does not need to be defined again.

Install Helm:

    $ kubectl create -f rbac-config.yaml
    serviceaccount "tiller" created
    clusterrolebinding "tiller" created
    $ helm init --service-account tiller

Output:

    $HELM_HOME has been configured at /root/.helm.
    Tiller (the Helm server-side component) has been installed into your Kubernetes Cluster.
    Please note: by default, Tiller is deployed with an insecure 'allow unauthenticated users' policy.
    To prevent this, run `helm init` with the --tiller-tls-verify flag.
    For more information on securing your installation see: https://docs.helm.sh/using_helm/#securing-your-helm-installation
    Happy Helming!

This approach is recommended for lab environments. Afterwards you can install system components such as ingress-nginx.

Run Helm in one namespace and manage another

Install Tiller in the helm-system namespace and allow it to release applications into the kube-public namespace.

Create the Tiller namespace and ServiceAccount

Create the helm-system namespace with kubectl create namespace helm-system.

Define the ServiceAccount:

    ---
    kind: ServiceAccount
    apiVersion: v1
    metadata:
      name: tiller
      namespace: helm-system

Roles and permissions for the namespace Tiller manages

Create a Role in kube-public that allows every verb on every resource in the core, extensions, and apps API groups. Bind Tiller's ServiceAccount to this Role so that Tiller can manage those resources in the kube-public namespace.

    ---
    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: tiller-manager
      namespace: kube-public
    rules:
    - apiGroups: ["", "extensions", "apps"]
      resources: ["*"]
      verbs: ["*"]
    ---
    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: tiller-binding
      namespace: kube-public
    subjects:
    - kind: ServiceAccount
      name: tiller
      namespace: helm-system
    roleRef:
      kind: Role
      name: tiller-manager
      apiGroup: rbac.authorization.k8s.io

Release information stored by Tiller

Helm stores release information in ConfigMaps in the namespace where Tiller is installed, here helm-system, so Tiller must be allowed to operate on ConfigMaps in helm-system. Create the Role tiller-manager in helm-system and bind it to the ServiceAccount tiller in helm-system.

    ---
    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      namespace: helm-system
      name: tiller-manager
    rules:
    - apiGroups: ["", "extensions", "apps"]
      resources: ["configmaps"]
      verbs: ["*"]
    ---
    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: tiller-binding
      namespace: helm-system
    subjects:
    - kind: ServiceAccount
      name: tiller
      namespace: helm-system
    roleRef:
      kind: Role
      name: tiller-manager
      apiGroup: rbac.authorization.k8s.io

Initialize Helm

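Install Helm with `helm init --service-account tiller --tiller-namespace helm-system`. The helm init parameters are:

--service-account: the ServiceAccount that Tiller runs as; applies to clusters with Kubernetes RBAC enabled.

--tiller-namespace: install Tiller into the specified namespace.

--tiller-image: the Tiller image to use.

--kube-context: install Tiller into a specific Kubernetes cluster, selected by kubeconfig context.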
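The flags above can be combined in a single call. The following is a minimal sketch, not a command from the original article: the image name `registry.example.com/mirror/tiller:v2.11.0` is a hypothetical placeholder for an image your nodes can pull, and `helm_init` is a helper introduced here for illustration. Passing `echo` as the first argument prints the command for review instead of running it.

```shell
#!/bin/sh
# ASSUMPTION: both values are placeholders; adjust them to your cluster.
TILLER_NS="helm-system"
TILLER_IMAGE="registry.example.com/mirror/tiller:v2.11.0"  # hypothetical mirror

# helm_init [PREFIX...]: with no arguments it runs `helm init`;
# with `echo` as the prefix it only prints the command line.
helm_init() {
  "$@" helm init \
    --service-account tiller \
    --tiller-namespace "$TILLER_NS" \
    --tiller-image "$TILLER_IMAGE"
}

# Dry run: print the assembled command.
helm_init echo
```

Once the printed command looks right, calling `helm_init` without arguments runs it against the cluster selected by your current kubeconfig context.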

The first run failed:

    [root@kuber24 helm]# helm init --service-account tiller --tiller-namespace helm-system
    Creating /root/.helm
    Creating /root/.helm/repository
    Creating /root/.helm/repository/cache
    Creating /root/.helm/repository/local
    Creating /root/.helm/plugins
    Creating /root/.helm/starters
    Creating /root/.helm/cache/archive
    Creating /root/.helm/repository/repositories.yaml
    Adding stable repo with URL: https://kubernetes-charts.storage.googleapis.com
    Error: Looks like "https://kubernetes-charts.storage.googleapis.com" is not a valid chart repository or cannot be reached: Get https://kubernetes-charts.storage.googleapis.com/index.yaml: EOF

This happens because Google-hosted services are blocked on this network. Edit the hosts file so that storage.googleapis.com resolves to a reachable IP. An up-to-date hosts configuration for reaching Google from mainland China is in the hosts/hosts-files/hosts file of the GitHub project googlehosts/hosts.

Running helm init again succeeds:

    [root@kuber24 helm]# helm init --service-account tiller --tiller-namespace helm-system
    Creating /root/.helm/repository/repositories.yaml
    Adding stable repo with URL: https://kubernetes-charts.storage.googleapis.com
    Adding local repo with URL: http://127.0.0.1:8879/charts
    $HELM_HOME has been configured at /root/.helm.
    Tiller (the Helm server-side component) has been installed into your Kubernetes Cluster.
    Please note: by default, Tiller is deployed with an insecure 'allow unauthenticated users' policy.
    To prevent this, run `helm init` with the --tiller-tls-verify flag.
    For more information on securing your installation see: https://docs.helm.sh/using_helm/#securing-your-helm-installation
    Happy Helming!

Checking the Tiller Pod status, however, shows the error ImagePullBackOff:

    [root@kuber24 resources]# kubectl get pods --all-namespaces|grep tiller
    helm-system   tiller-deploy-cdcd5dcb5-fqm57   0/1   ImagePullBackOff   0   13m

Inspecting the Pod with kubectl describe pod tiller-deploy-cdcd5dcb5-fqm57 -n helm-system shows that it depends on the image gcr.io/kubernetes-helm/tiller:v2.11.0.

Search Docker Hub to see whether anyone has mirrored this image:

    [root@kuber24 ~]# docker search tiller:v2.11.0
    INDEX       NAME                          DESCRIPTION                                     STARS   OFFICIAL   AUTOMATED
    docker.io   docker.io/jay1991115/tiller   gcr.io/kubernetes-helm/tiller:v2.11.0           1                  [OK]
    docker.io   docker.io/luyx30/tiller       tiller:v2.11.0                                  1                  [OK]
    docker.io   docker.io/1017746640/tiller   FROM gcr.io/kubernetes-helm/tiller:v2.11.0      0                  [OK]
    docker.io   docker.io/724399396/tiller    gcr.io/kubernetes-helm/tiller:v2.11.0-rc.2...   0                  [OK]
    docker.io   docker.io/fengzos/tiller      gcr.io/kubernetes-helm/tiller:v2.11.0           0                  [OK]
    docker.io   docker.io/imwower/tiller      tiller from gcr.io/kubernetes-helm/tiller:...   0                  [OK]
    docker.io   docker.io/xiaotech/tiller     FROM gcr.io/kubernetes-helm/tiller:v2.11.0      0                  [OK]
    docker.io   docker.io/yumingc/tiller      tiller:v2.11.0                                  0                  [OK]
    docker.io   docker.io/zhangc476/tiller    gcr.io/kubernetes-helm/tiller/kubernetes-h...   0                  [OK]

As with other Google images, you can also pull a copy mirrored on hub.docker.com under mirrorgooglecontainers and then retag it with the original image name. This has to be done on every node.
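The pull-and-retag step can be sketched as follows. This is an illustration under stated assumptions: `example/tiller:v2.11.0` is a hypothetical placeholder for whichever mirrored image you have chosen and trust, and `retag_tiller` is a helper introduced here. Passing `echo` prints the docker commands instead of running them.

```shell
#!/bin/sh
# ASSUMPTION: MIRROR is a placeholder, not a recommendation of a specific image.
MIRROR="example/tiller:v2.11.0"
# The name the Tiller Deployment expects, taken from `kubectl describe pod`.
TARGET="gcr.io/kubernetes-helm/tiller:v2.11.0"

# retag_tiller [PREFIX...]: pull the mirror, then tag it with the original name.
retag_tiller() {
  "$@" docker pull "$MIRROR" &&
  "$@" docker tag "$MIRROR" "$TARGET"
}

# Dry run: show what would be executed on each node.
retag_tiller echo
```

Because the chart output later in this article shows imagePullPolicy IfNotPresent being used for workloads, a locally tagged image is enough for the kubelet to start the container, which is why the retag has to happen on every node that may schedule the Pod.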

Installation problems

Image problems

The image cannot be pulled: use a copy that someone has synced to Docker Hub, found with docker search $NAME:$VERSION.

Helm reports that the repo cannot be reached during installation

Work around the block by editing the hosts file.

Chart download problems

Error message:

    [root@kuber24 ~]# helm install nginx --tiller-namespace helm-system --namespace kube-public
    Error: failed to download "nginx" (hint: running `helm repo update` may help)

Running helm repo update did not solve the problem, as shown here:

    [root@kuber24 ~]# helm install nginx --tiller-namespace helm-system --namespace kube-public
    Error: failed to download "nginx" (hint: running `helm repo update` may help)
    [root@kuber24 ~]# helm repo update
    Hang tight while we grab the latest from your chart repositories...
    ...Skip local chart repository
    ...Successfully got an update from the "stable" chart repository
    Update Complete. ⎈ Happy Helming!⎈
    [root@kuber24 ~]# helm install nginx --tiller-namespace helm-system --namespace kube-public
    Error: failed to download "nginx" (hint: running `helm repo update` may help)

Possible causes:

There is no chart named nginx: use helm search nginx to look up the available nginx charts.

A network problem prevents the download. In this case, helm reports an error after a timeout.

Usage

Add common repos

Add the gitlab, aliyun, and official incubator chart repositories.

    helm repo add gitlab https://charts.gitlab.io/
    helm repo add aliyun https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts
    helm repo add incubator https://kubernetes-charts-incubator.storage.googleapis.com/

Day-to-day use

In this section, $NAME stands for a Helm repo/chart_name.

Search for charts:

    helm search $NAME

List releases:

    helm ls [--tiller-namespace $TILLER_NAMESPACE]

Show package information:

    helm inspect $NAME

Show the options a package supports:

    helm inspect values $NAME

Deploy a chart:

    helm install $NAME [--tiller-namespace $TILLER_NAMESPACE] [--namespace $CHART_DEPLOY_NAMESPACE]

Delete a release:

    helm delete $RELEASE_NAME [--purge] [--tiller-namespace $TILLER_NAMESPACE]

Upgrade:

    helm upgrade --set $PARAM_NAME=$PARAM_VALUE $RELEASE_NAME $NAME [--tiller-namespace $TILLER_NAMESPACE]

Roll back:

    helm rollback $RELEASE_NAME $REVISION [--tiller-namespace $TILLER_NAMESPACE]

Deleting a release without --purge only tears down the deployed Pods. The release record itself is kept, so a chart cannot be released again under the same name until the old release is purged.
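Repeating `--tiller-namespace` on every command is easy to forget. Helm v2 also reads the `TILLER_NAMESPACE` environment variable when the flag is omitted, so one option is to export it once per shell session. A minimal sketch, assuming Tiller lives in `helm-system` as in this article:

```shell
#!/bin/sh
# Helm v2 falls back to $TILLER_NAMESPACE when --tiller-namespace is not given.
export TILLER_NAMESPACE=helm-system

# With the variable exported, these two commands talk to the same Tiller:
#   helm ls
#   helm ls --tiller-namespace helm-system
echo "Tiller namespace for this shell: ${TILLER_NAMESPACE}"
```

An explicit `--tiller-namespace` flag still takes effect when given, so scripts that target several Tiller instances can keep passing it.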

Deploy a release

When deploying mysql, first look up the configurable parameters and then set the ones you need.

List the configurable parameters:

    [root@kuber24 charts]# helm inspect values aliyun/mysql
    ## mysql image version
    ## ref: https://hub.docker.com/r/library/mysql/tags/
    ##
    image: "mysql"
    imageTag: "5.7.14"
    ## Specify password for root user
    ##
    ## Default: random 10 character string
    # mysqlRootPassword: testing
    ## Create a database user
    ##
    # mysqlUser:
    # mysqlPassword:
    ## Allow unauthenticated access, uncomment to enable
    ##
    # mysqlAllowEmptyPassword: true
    ## Create a database
    ##
    # mysqlDatabase:
    ## Specify an imagePullPolicy (Required)
    ## It's recommended to change this to 'Always' if the image tag is 'latest'
    ## ref: http://kubernetes.io/docs/user-guide/images/#updating-images
    ##
    imagePullPolicy: IfNotPresent
    livenessProbe:
      initialDelaySeconds: 30
      periodSeconds: 10
      timeoutSeconds: 5
      successThreshold: 1
      failureThreshold: 3
    readinessProbe:
      initialDelaySeconds: 5
      periodSeconds: 10
      timeoutSeconds: 1
      successThreshold: 1
      failureThreshold: 3
    ## Persist data to a persistent volume
    persistence:
      enabled: true
      ## database data Persistent Volume Storage Class
      ## If defined, storageClassName: <storageClass>
      ## If set to "-", storageClassName: "", which disables dynamic provisioning
      ## If undefined (the default) or set to null, no storageClassName spec is
      ##   set, choosing the default provisioner. (gp2 on AWS, standard on
      ##   GKE, AWS & OpenStack)
      ##
      # storageClass: "-"
      accessMode: ReadWriteOnce
      size: 8Gi
    ## Configure resource requests and limits
    ## ref: http://kubernetes.io/docs/user-guide/compute-resources/
    ##
    resources:
      requests:
        memory: 256Mi
        cpu: 100m
    # Custom mysql configuration files used to override default mysql settings
    configurationFiles:
    #  mysql.cnf: |-
    #    [mysqld]
    #    skip-name-resolve
    ## Configure the service
    ## ref: http://kubernetes.io/docs/user-guide/services/
    service:
      ## Specify a service type
      ## ref: https://kubernetes.io/docs/concepts/services-networking/service/#publishing-services---service-types
      type: ClusterIP
      port: 3306
      # nodePort: 32000

For example, to set the MySQL root password, pass the option directly with --set: --set mysqlRootPassword=hgfgood.
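When more than one or two options need to change, a values file passed with `-f` is an alternative to repeating `--set`. The sketch below is illustrative rather than part of the original walkthrough: the file name `mysql-values.yaml`, the temporary directory, and the `install_mysql` helper are assumptions, while the keys come from the `helm inspect values` output. Passing `echo` prints the install command instead of running it.

```shell
#!/bin/sh
# Write the overrides to a scratch directory (hypothetical file name).
WORKDIR="$(mktemp -d)"
VALUES_FILE="$WORKDIR/mysql-values.yaml"

cat > "$VALUES_FILE" <<'EOF'
# Overrides for the aliyun/mysql chart; keys as listed by `helm inspect values`.
mysqlRootPassword: hgfgood
persistence:
  size: 8Gi
EOF

# install_mysql [PREFIX...]: run (or, with `echo`, print) the install command.
install_mysql() {
  "$@" helm install --name mysql-dev -f "$VALUES_FILE" aliyun/mysql \
    --tiller-namespace helm-system --namespace kube-public
}

# Dry run: print the command line.
install_mysql echo
```

A values file can be kept under version control next to the deployment scripts, though a real password is better supplied some other way than a committed file.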

The persistence section of the mysql options shows that mysql needs persistent storage, so a PersistentVolume (PV) has to be provisioned in Kubernetes.

Create the PV:

    [root@kuber24 resources]# cat local-pv.yml
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: local-pv
      namespace: kube-public
    spec:
      capacity:
        storage: 30Gi
      accessModes:
        - ReadWriteOnce
      persistentVolumeReclaimPolicy: Recycle
      hostPath:
        path: /home/k8s

PersistentVolumes are cluster-scoped, so the namespace field in this file has no effect.

The complete command to release the chart is: helm install --name mysql-dev --set mysqlRootPassword=hgfgood aliyun/mysql --tiller-namespace helm-system --namespace kube-public.

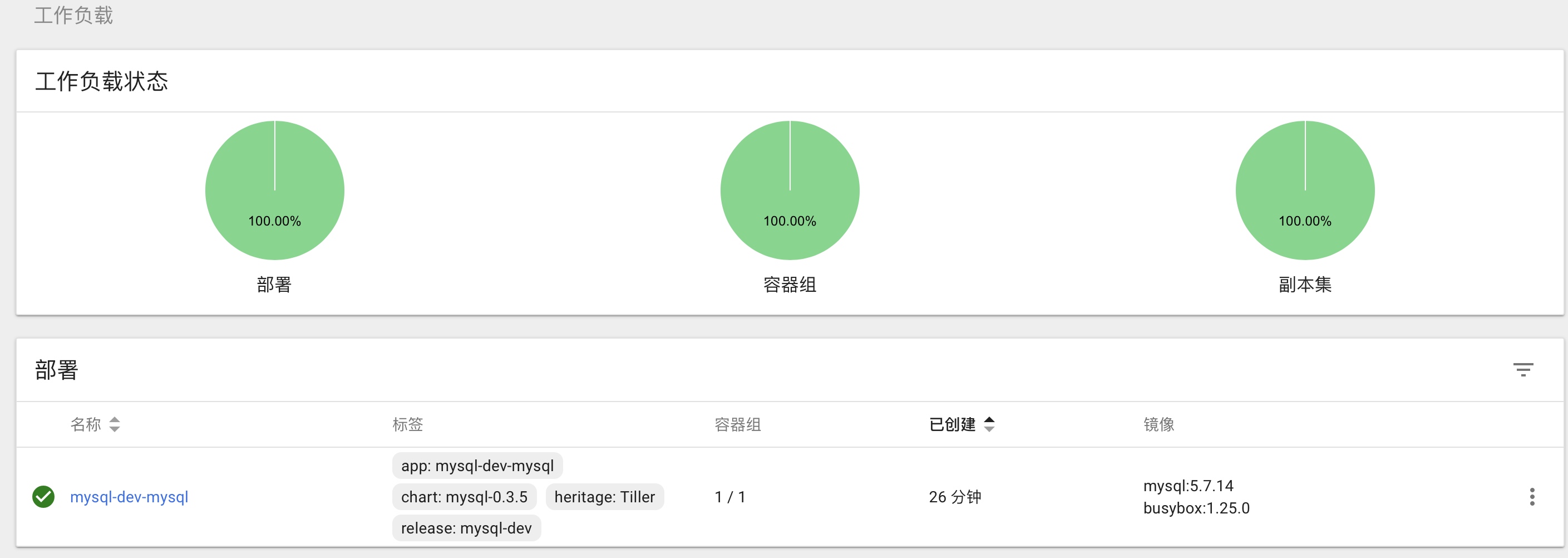

List the charts that have been released:

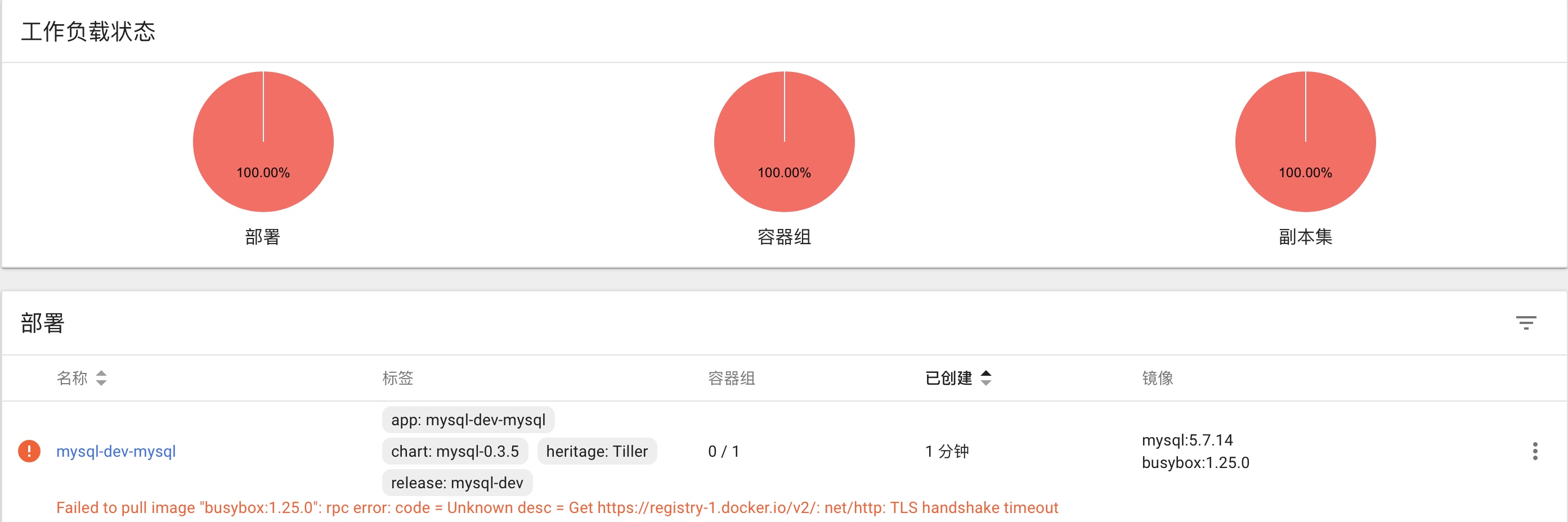

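    [root@kuber24 charts]# helm ls --tiller-namespace=helm-system
    NAME        REVISION   UPDATED                    STATUS     CHART         APP VERSION   NAMESPACE
    mysql-dev   1          Fri Oct 26 10:35:55 2018   DEPLOYED   mysql-0.3.5                 kube-public

When everything is working, the deployment can also be monitored from the dashboard.

This mysql chart needs the busybox image. Pulling it occasionally fails because Docker pulls from the overseas Docker Hub by default. In that case, pull the busybox image in advance.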

Upgrade and rollback

In the example above, mysql was installed with the root password hgfgood. This example changes it to hgf and then rolls back to the original password hgfgood.

Query the password after installation:

    [root@kuber24 charts]# kubectl get secret --namespace kube-public mysql-dev-mysql -o jsonpath="{.data.mysql-root-password}" | base64 --decode; echo
    hgfgood

Update the root password with helm upgrade --set mysqlRootPassword=hgf mysql-dev mysql --tiller-namespace helm-system

After the upgrade, query the root password again:

    [root@kuber24 charts]# kubectl get secret --namespace kube-public mysql-dev-mysql -o jsonpath="{.data.mysql-root-password}" | base64 --decode; echo
    hgf

Show the release information:

    [root@kuber24 charts]# helm ls --tiller-namespace helm-system
    NAME        REVISION   UPDATED                    STATUS     CHART         APP VERSION   NAMESPACE
    mysql-dev   2          Fri Oct 26 11:26:48 2018   DEPLOYED   mysql-0.3.5                 kube-public

The REVISION column shows that mysql-dev now has two revisions.

Roll back to revision 1:

    [root@kuber24 charts]# helm rollback mysql-dev 1 --tiller-namespace helm-system
    Rollback was a success! Happy Helming!
    [root@kuber24 charts]# kubectl get secret --namespace kube-public mysql-dev-mysql -o jsonpath="{.data.mysql-root-password}" | base64 --decode; echo
    hgfgood

The output above shows that the release has been rolled back.
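The `kubectl get secret ... | base64 --decode` pipeline used in this section is needed because Kubernetes stores Secret values base64-encoded. The round trip can be reproduced locally without a cluster; this small sketch uses the example password from this article.

```shell
#!/bin/sh
# Encode the example password the way it appears in a Secret's data field.
# printf is used instead of echo so no trailing newline gets encoded.
encoded="$(printf '%s' 'hgfgood' | base64)"
echo "$encoded"    # prints aGdmZ29vZA==

# Decode it again, as the kubectl pipeline does.
decoded="$(printf '%s' "$encoded" | base64 --decode)"
echo "$decoded"    # prints hgfgood
```

Base64 is an encoding, not encryption, so anyone who can read the Secret can recover the password.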

Common problems

Error: could not find tiller. Whenever the Helm client needs to talk to Tiller, you must specify Tiller's namespace with --tiller-namespace helm-system. The default value of this parameter is kube-system.

Chart downloads fail from within mainland China

Downloads can fail because of network problems, for example:

    [root@kuber24 ~]# helm install stable/mysql --tiller-namespace helm-system --namespace kube-public --debug
    [debug] Created tunnel using local port: '32774'
    [debug] SERVER: "127.0.0.1:32774"
    [debug] Original chart version: ""
    Error: Get https://kubernetes-charts.storage.googleapis.com/mysql-0.10.2.tgz: read tcp 10.20.13.24:56594->216.58.221.240:443: read: connection reset by peer

Change into the local directory where you keep charts.

Fetch the chart from the aliyun repo.

For example, to install mysql:

    helm fetch aliyun/mysql --untar
    [root@kuber24 charts]# ls
    mysql
    [root@kuber24 charts]# ls mysql/
    Chart.yaml  README.md  templates  values.yaml

Then run helm install again to install the mysql chart from the local directory:

    helm install mysql --tiller-namespace helm-system --namespace kube-public

The --debug flag turns on debug output.

    [root@kuber24 charts]# helm install mysql --tiller-namespace helm-system --namespace kube-public --debug
    [debug] Created tunnel using local port: '41905'
    [debug] SERVER: "127.0.0.1:41905"
    [debug] Original chart version: ""
    [debug] CHART PATH: /root/Downloads/charts/mysql
    NAME: kissable-bunny
    REVISION: 1
    RELEASED: Thu Oct 25 20:20:23 2018
    CHART: mysql-0.3.5
    USER-SUPPLIED VALUES:
    {}
    COMPUTED VALUES:
    configurationFiles: null
    image: mysql
    imagePullPolicy: IfNotPresent
    imageTag: 5.7.14
    livenessProbe:
      failureThreshold: 3
      initialDelaySeconds: 30
      periodSeconds: 10
      successThreshold: 1
      timeoutSeconds: 5
    persistence:
      accessMode: ReadWriteOnce
      enabled: true
      size: 8Gi
    readinessProbe:
      failureThreshold: 3
      initialDelaySeconds: 5
      periodSeconds: 10
      successThreshold: 1
      timeoutSeconds: 1
    resources:
      requests:
        cpu: 100m
        memory: 256Mi
    service:
      port: 3306
      type: ClusterIP
    HOOKS:
    MANIFEST:
    ---
    # Source: mysql/templates/secrets.yaml
    apiVersion: v1
    kind: Secret
    metadata:
      name: kissable-bunny-mysql
      labels:
        app: kissable-bunny-mysql
        chart: "mysql-0.3.5"
        release: "kissable-bunny"
        heritage: "Tiller"
    type: Opaque
    data:
      mysql-root-password: "TzU5U2tScHR0Sg=="
      mysql-password: "RGRXU3Ztb3hQNw=="
    ---
    # Source: mysql/templates/pvc.yaml
    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: kissable-bunny-mysql
      labels:
        app: kissable-bunny-mysql
        chart: "mysql-0.3.5"
        release: "kissable-bunny"
        heritage: "Tiller"
    spec:
      accessModes:
        - "ReadWriteOnce"
      resources:
        requests:
          storage: "8Gi"
    ---
    # Source: mysql/templates/svc.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: kissable-bunny-mysql
      labels:
        app: kissable-bunny-mysql
        chart: "mysql-0.3.5"
        release: "kissable-bunny"
        heritage: "Tiller"
    spec:
      type: ClusterIP
      ports:
      - name: mysql
        port: 3306
        targetPort: mysql
      selector:
        app: kissable-bunny-mysql
    ---
    # Source: mysql/templates/deployment.yaml
    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: kissable-bunny-mysql
      labels:
        app: kissable-bunny-mysql
        chart: "mysql-0.3.5"
        release: "kissable-bunny"
        heritage: "Tiller"
    spec:
      template:
        metadata:
          labels:
            app: kissable-bunny-mysql
        spec:
          initContainers:
          - name: "remove-lost-found"
            image: "busybox:1.25.0"
            imagePullPolicy: "IfNotPresent"
            command: ["rm", "-fr", "/var/lib/mysql/lost+found"]
            volumeMounts:
            - name: data
              mountPath: /var/lib/mysql
          containers:
          - name: kissable-bunny-mysql
            image: "mysql:5.7.14"
            imagePullPolicy: "IfNotPresent"
            resources:
              requests:
                cpu: 100m
                memory: 256Mi
            env:
            - name: MYSQL_ROOT_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: kissable-bunny-mysql
                  key: mysql-root-password
            - name: MYSQL_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: kissable-bunny-mysql
                  key: mysql-password
            - name: MYSQL_USER
              value: ""
            - name: MYSQL_DATABASE
              value: ""
            ports:
            - name: mysql
              containerPort: 3306
            livenessProbe:
              exec:
                command:
                - sh
                - -c
                - "mysqladmin ping -u root -p${MYSQL_ROOT_PASSWORD}"
              initialDelaySeconds: 30
              periodSeconds: 10
              timeoutSeconds: 5
              successThreshold: 1
              failureThreshold: 3
            readinessProbe:
              exec:
                command:
                - sh
                - -c
                - "mysqladmin ping -u root -p${MYSQL_ROOT_PASSWORD}"
              initialDelaySeconds: 5
              periodSeconds: 10
              timeoutSeconds: 1
              successThreshold: 1
              failureThreshold: 3
            volumeMounts:
            - name: data
              mountPath: /var/lib/mysql
          volumes:
          - name: data
            persistentVolumeClaim:
              claimName: kissable-bunny-mysql
    LAST DEPLOYED: Thu Oct 25 20:20:23 2018
    NAMESPACE: kube-public
    STATUS: DEPLOYED
    RESOURCES:
    ==> v1/Pod(related)
    NAME                                  READY  STATUS   RESTARTS  AGE
    kissable-bunny-mysql-c7df69d65-lmjzn  0/1    Pending  0         0s
    ==> v1/Secret
    NAME                  AGE
    kissable-bunny-mysql  1s
    ==> v1/PersistentVolumeClaim
    kissable-bunny-mysql  1s
    ==> v1/Service
    kissable-bunny-mysql  1s
    ==> v1beta1/Deployment
    kissable-bunny-mysql  1s
    NOTES:
    MySQL can be accessed via port 3306 on the following DNS name from within your cluster:
    kissable-bunny-mysql.kube-public.svc.cluster.local
    To get your root password run:
        MYSQL_ROOT_PASSWORD=$(kubectl get secret --namespace kube-public kissable-bunny-mysql -o jsonpath="{.data.mysql-root-password}" | base64 --decode; echo)
    To connect to your database:
    1. Run an Ubuntu pod that you can use as a client:
        kubectl run -i --tty ubuntu --image=ubuntu:16.04 --restart=Never -- bash -il
    2. Install the mysql client:
        $ apt-get update && apt-get install mysql-client -y
    3. Connect using the mysql cli, then provide your password:
        $ mysql -h kissable-bunny-mysql -p
    To connect to your database directly from outside the K8s cluster:
        MYSQL_HOST=127.0.0.1
        MYSQL_PORT=3306
        # Execute the following commands to route the connection:
        export POD_NAME=$(kubectl get pods --namespace kube-public -l "app=kissable-bunny-mysql" -o jsonpath="{.items[0].metadata.name}")
        kubectl port-forward $POD_NAME 3306:3306
        mysql -h ${MYSQL_HOST} -P${MYSQL_PORT} -u root -p${MYSQL_ROOT_PASSWORD}

Packaging a chart

[ ] A detailed packaging walkthrough.

    # Create a new chart
    helm create hello-chart
    # Validate the chart
    helm lint hello-chart
    # Package the chart into a tgz archive
    helm package hello-chart