
1. The requires_grad attribute: checking whether gradients are recorded

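    import torch

    x = torch.rand(3, 3)  # a tensor created directly does not record gradients by default
    x.requires_grad

Output:

    False

2. The requires_grad_() method: calling it to turn gradient recording on or off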

Signature: requires_grad_(requires_grad=True)
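The trailing underscore follows PyTorch's in-place naming convention. The method changes the flag on the tensor itself and returns that same tensor, so it can be chained onto a constructor. The minimal sketch below checks this. It also shows what "recording gradients" buys you: after backward(), .grad is filled in. The tensor names and the toy loss are illustrative only.

```python
import torch

# requires_grad_ modifies the tensor in place and returns the same object
x = torch.tensor([1., 2., 3.])
y = x.requires_grad_()          # default argument is requires_grad=True
print(y is x, x.requires_grad)  # True True

# because it returns the tensor, it can be chained onto a constructor
w = torch.zeros(2, 2).requires_grad_()
print(w.requires_grad)          # True

# with the flag set, autograd records operations; backward() fills x.grad
loss = (x ** 2).sum()           # toy scalar loss: d(loss)/dx = 2 * x
loss.backward()
print(x.grad)                   # tensor([2., 4., 6.])
```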

    import torch

    x = torch.tensor([1., 2., 3.])
    print('x_base: ', x.requires_grad)  # default state: False
    x.requires_grad_(False)
    print('x.requires_grad_(False): ', x.requires_grad)  # False
    x.requires_grad_(True)  # equivalent to x.requires_grad_()
    print('x.requires_grad_(True): ', x.requires_grad)  # True

Output:

    x_base:  False
    x.requires_grad_(False):  False
    x.requires_grad_(True):  True

3. The requires_grad argument: setting gradient recording when a tensor is created

    import torch

    x = torch.tensor([1., 2., 3.], requires_grad=True)
    print('x_base: ', x.requires_grad)  # True from the moment of creation

Output:

    x_base:  True

4. Viewing a model's parameter names and values

    [name for name, param in model.named_parameters()]   # parameter names
    [param for name, param in model.named_parameters()]  # parameter values

5. Viewing the gradients of a model's weights

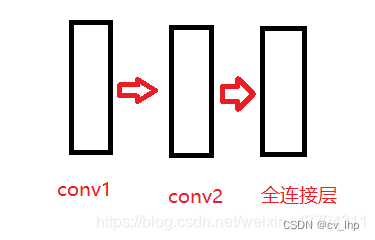

The network is defined as follows:

    import torch
    import torch.nn as nn

    class Simple(nn.Module):
        def __init__(self):
            super().__init__()
            self.conv1 = nn.Conv2d(3, 16, 3, 1, padding=1, bias=False)
            self.conv2 = nn.Conv2d(16, 32, 3, 1, padding=1, bias=False)
            self.linear = nn.Linear(32*10*10, 20, bias=False)

        def forward(self, x):
            x = self.conv1(x)
            x = self.conv2(x)
            x = self.linear(x.view(x.size(0), -1))
            return x

    model = Simple()

To print the gradient of a weight:

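    print(model.conv1.weight.grad)

Note that .grad is None until backward() has been called on a value computed from the model's output. Only after that does it hold gradient values.

Source: https://blog.csdn.net/flyingluohaipeng/article/details/129192987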
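Sections 4 and 5 can be combined into one runnable sketch. It redefines the Simple network so it runs on its own. The input shape, a batch of 2 images of size 3 x 10 x 10, is an assumption inferred from the 32*10*10 input size of the linear layer. Summing the output is only a stand-in for a real loss function.

```python
import torch
import torch.nn as nn

class Simple(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 16, 3, 1, padding=1, bias=False)
        self.conv2 = nn.Conv2d(16, 32, 3, 1, padding=1, bias=False)
        self.linear = nn.Linear(32 * 10 * 10, 20, bias=False)

    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2(x)
        return self.linear(x.view(x.size(0), -1))

model = Simple()

# before any backward pass, parameter gradients are None
grad_before = model.conv1.weight.grad
print(grad_before)  # None

# assumed input: padding=1 keeps 10x10 spatial size through both convolutions
inputs = torch.randn(2, 3, 10, 10)
loss = model(inputs).sum()  # placeholder scalar loss, for illustration only
loss.backward()

# each gradient has the same shape as the parameter it belongs to
for name, param in model.named_parameters():
    print(name, tuple(param.shape), tuple(param.grad.shape))
```

In a real training loop the gradients would come from an actual loss. They are usually cleared with optimizer.zero_grad() or model.zero_grad() between steps, because backward() accumulates into .grad.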