This article walks through implementing face recognition on Android with the ArcSoft SDK. Many developers are not yet familiar with it, so I hope this guide is useful to you. Let's get started!

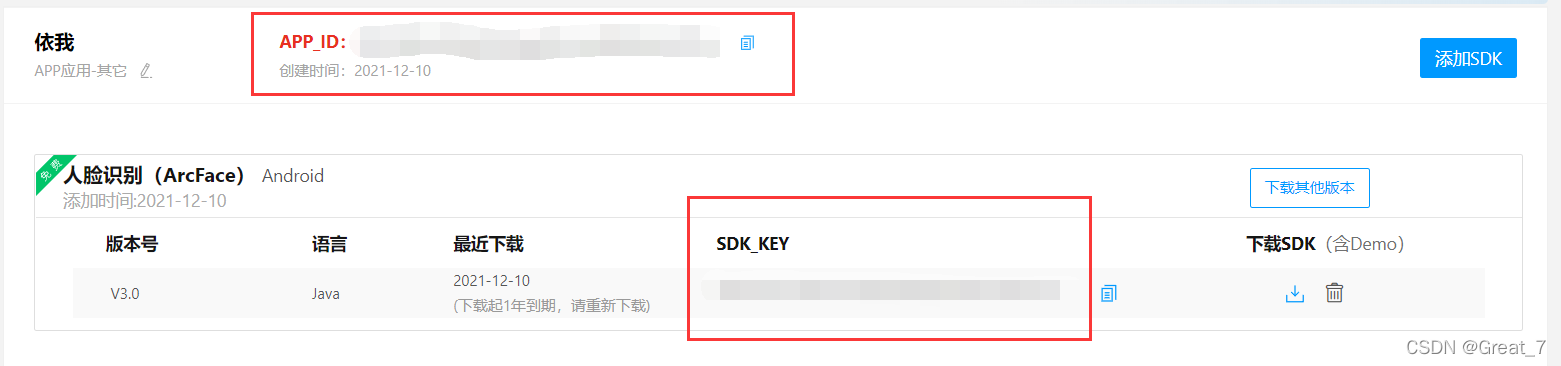

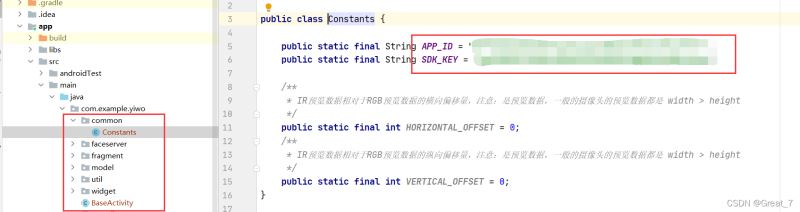

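Create an application in the ArcSoft developer center and note down its APP_ID and SDK_KEY, because you will need them later. Once the application is created, you can download the SDK.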

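After downloading, use the developer guide and the sample code in the SDK package as a reference. Below is a screenshot of the SDK package structure, taken from the developer guide.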

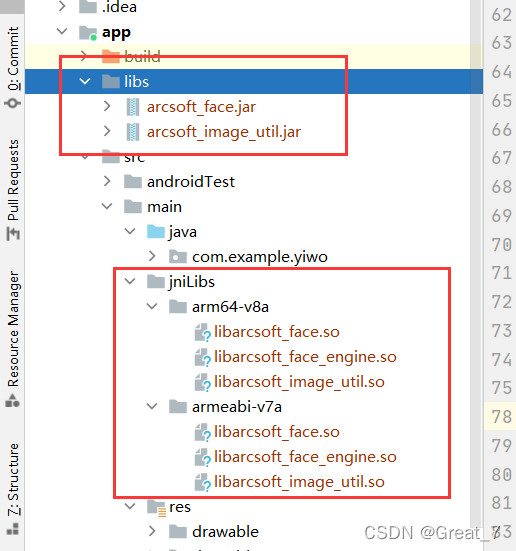

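Create an empty project and copy the .jar and .so files from the SDK package into the project directories shown below. The configuration that follows is critical. If you miss even one step, you will end up chasing a frustrating bug.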
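The `activeEngine` code later in this article refuses to run when a `libraryExists` flag is false. Below is a minimal plain-Java sketch of such a check, built on two assumptions:

- The copied libraries end up together in one directory. On a device, the app's extracted native library directory is exposed as `getApplicationInfo().nativeLibraryDir`.
- The three file names below match ArcFace 3.0-era SDK packages. Verify them against the .so files actually shipped in your SDK.

The class and method names are hypothetical.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/** Hypothetical helper: reports which required native libraries are missing. */
class NativeLibraryCheck {

    // Assumed library names; compare them with the .so files in your SDK package.
    static final String[] REQUIRED_LIBRARIES = {
            "libarcsoft_face_engine.so",
            "libarcsoft_face.so",
            "libarcsoft_image_util.so"
    };

    /** Returns the required library names that are absent from the given file names. */
    static List<String> missingLibraries(String[] presentFileNames) {
        Set<String> present = new HashSet<>(Arrays.asList(presentFileNames));
        List<String> missing = new ArrayList<>();
        for (String lib : REQUIRED_LIBRARIES) {
            if (!present.contains(lib)) {
                missing.add(lib);
            }
        }
        return missing;
    }

    /** True when every required library exists as a file in the given directory. */
    static boolean libraryExists(File libDir) {
        String[] names = libDir.list(); // null if libDir is not a readable directory
        return names != null && missingLibraries(names).isEmpty();
    }

    public static void main(String[] args) {
        String[] copied = {"libarcsoft_face.so", "libarcsoft_face_engine.so"};
        // Prints [libarcsoft_image_util.so]
        System.out.println(missingLibraries(copied));
    }
}
```

Returning the list of missing names, rather than only a boolean, makes it easy to show the user exactly which file was not copied.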

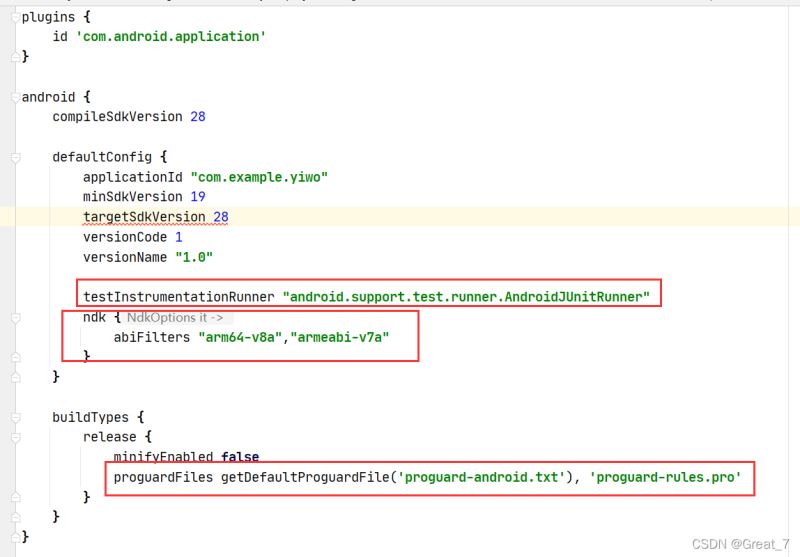

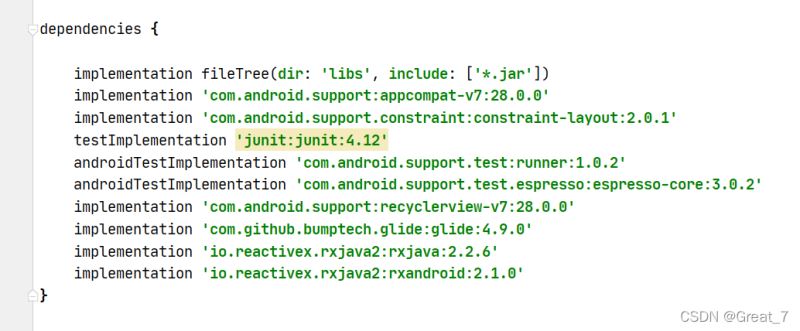

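In the app module's `build.gradle`, three areas need changes:

- **First red box.** It originally referenced androidx, which is incompatible with the support library, so it must be changed. For the same reason, every place in the project that uses androidx must be changed too.
- **Second red box.** This is the `ndk` block, and it is what lets the build find the .so files copied in earlier.
- **Third red box.** It also needs to be changed as shown.

Finally, in the `dependencies` section, replace the androidx entries as well.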
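Why the `ndk` block matters: .so files live in per-ABI folders. The installed app can only load libraries for an ABI that the device supports and the APK actually contains. The sketch below is a simplified plain-Java model of that matching under the following assumptions:

- The `ndk` block is an `abiFilters` list, as is usual.
- The device's ABIs are listed in preference order, the way Android reports them in `Build.SUPPORTED_ABIS`.
- Details such as multi-ABI installs are ignored.

The class and method names are hypothetical, and the ABI strings are only examples.

```java
import java.util.Arrays;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

/** Hypothetical, simplified model of how the native-library ABI is chosen. */
class AbiMatcher {

    /** ABIs that end up in the APK: folders holding the .so files, restricted by abiFilters. */
    static Set<String> packagedAbis(List<String> jniLibFolders, List<String> abiFilters) {
        Set<String> packaged = new LinkedHashSet<>(jniLibFolders);
        packaged.retainAll(abiFilters);
        return packaged;
    }

    /** First device ABI (in preference order) that the APK provides, or null if none. */
    static String selectAbi(List<String> deviceAbis, Set<String> packaged) {
        for (String abi : deviceAbis) {
            if (packaged.contains(abi)) {
                return abi;
            }
        }
        return null; // no match: loading the SDK's .so files would fail at runtime
    }

    public static void main(String[] args) {
        List<String> device = Arrays.asList("arm64-v8a", "armeabi-v7a", "armeabi");
        Set<String> packaged = packagedAbis(
                Arrays.asList("armeabi-v7a", "arm64-v8a"),
                Arrays.asList("armeabi-v7a"));
        System.out.println(selectAbi(device, packaged)); // prints armeabi-v7a
    }
}
```

In this model, a filter that names an ABI for which no .so folder was copied leaves `packaged` empty, and `selectAbi` returns null. That corresponds to the "library not found" situation the `ndk` block is meant to prevent.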

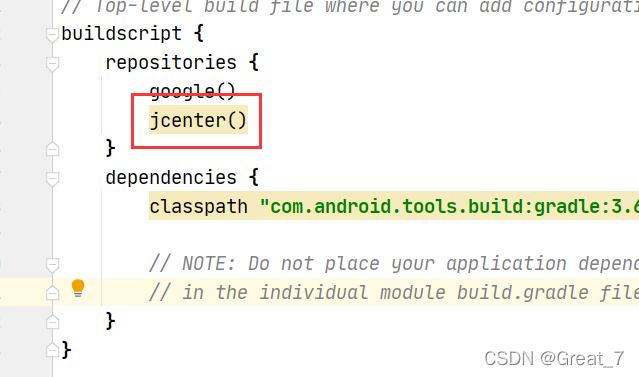

In the project-level `build.gradle`, remember to add `jcenter()`.

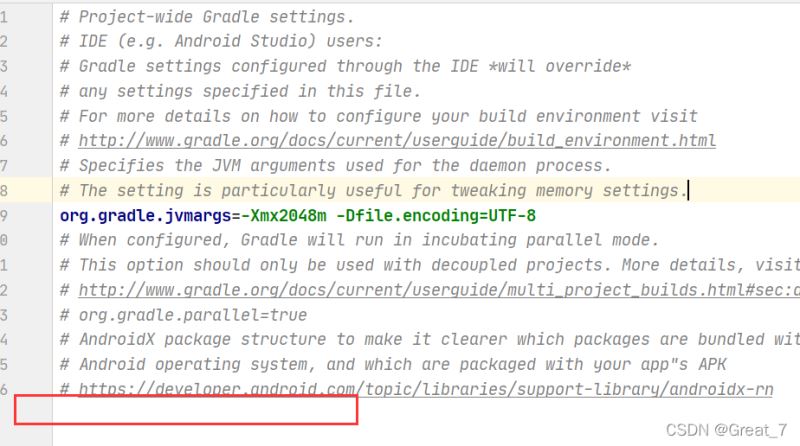

`gradle.properties` may also contain androidx settings; delete those as well.
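A quick way to spot leftover AndroidX settings is to scan `gradle.properties`. Projects created by recent Android Studio versions typically contain `android.useAndroidX=true` and `android.enableJetifier=true`.

The sketch below is a hypothetical plain-Java helper, not part of the SDK. It loads the file with `java.util.Properties` and lists every key that mentions AndroidX or Jetifier.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.Properties;

/** Hypothetical helper: lists AndroidX-related keys in a gradle.properties file. */
class AndroidXPropertyScanner {

    /** Keys mentioning AndroidX or Jetifier (case-insensitive), in alphabetical order. */
    static List<String> androidXKeys(Reader gradleProperties) {
        Properties props = new Properties();
        try {
            props.load(gradleProperties);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        List<String> keys = new ArrayList<>();
        for (String key : props.stringPropertyNames()) {
            String lower = key.toLowerCase(Locale.ROOT);
            if (lower.contains("androidx") || lower.contains("jetifier")) {
                keys.add(key);
            }
        }
        keys.sort(null); // natural order keeps the output stable
        return keys;
    }

    public static void main(String[] args) {
        String sample = "org.gradle.jvmargs=-Xmx1536m\n"
                + "android.useAndroidX=true\n"
                + "android.enableJetifier=true\n";
        // Prints [android.enableJetifier, android.useAndroidX]
        System.out.println(androidXKeys(new StringReader(sample)));
    }
}
```

On a real project, you would pass a reader for the actual file, for example `java.nio.file.Files.newBufferedReader(path)`.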

In `AndroidManifest.xml`, add the permission declarations inside `<manifest>` and the provider inside `<application>`.
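Declaring the permissions listed below is only half the job. On Android 6.0 (API 23) and later, dangerous permissions such as `CAMERA`, `READ_PHONE_STATE` and the storage permissions must also be granted at runtime. That is why `activeEngine` calls `checkPermissions(NEEDED_PERMISSIONS)` and falls back to `ActivityCompat.requestPermissions`.

The plain-Java sketch below mirrors that check. It uses a stand-in predicate in place of `ContextCompat.checkSelfPermission(context, p) == PackageManager.PERMISSION_GRANTED`. The class name and predicate parameter are hypothetical, and the demo's own `checkPermissions` may differ in detail.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

/** Hypothetical plain-Java model of a checkPermissions(...) helper. */
class PermissionCheck {

    /** Permissions from the list that are not granted yet, in their original order. */
    static List<String> missing(String[] needed, Predicate<String> isGranted) {
        List<String> result = new ArrayList<>();
        for (String permission : needed) {
            if (!isGranted.test(permission)) {
                result.add(permission);
            }
        }
        return result;
    }

    /** True only if every permission in the list is granted. */
    static boolean checkPermissions(String[] needed, Predicate<String> isGranted) {
        return missing(needed, isGranted).isEmpty();
    }

    public static void main(String[] args) {
        String[] needed = {
                "android.permission.CAMERA",
                "android.permission.READ_PHONE_STATE"
        };
        // Pretend that only CAMERA has been granted so far.
        Predicate<String> granted = "android.permission.CAMERA"::equals;
        System.out.println(checkPermissions(needed, granted)); // prints false
        System.out.println(missing(needed, granted)); // prints [android.permission.READ_PHONE_STATE]
    }
}
```

Passing only the missing permissions to `requestPermissions` avoids prompting the user again for ones already granted.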

manifest:

```xml
<uses-permission android:name="android.permission.CAMERA" />
<uses-permission android:name="android.permission.READ_PHONE_STATE" />
<uses-permission android:name="android.permission.INTERNET" />
<uses-permission android:name="android.permission.READ_EXTERNAL_STORAGE" />
<uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE" />
```

provider:

```xml
<provider
    android:name="android.support.v4.content.FileProvider"
    android:authorities="${applicationId}.provider"
    android:exported="false"
    android:grantUriPermissions="true">
    <meta-data
        android:name="android.support.FILE_PROVIDER_PATHS"
        android:resource="@xml/provider_paths" />
</provider>
```

After adding the provider, create an `xml` directory under `res` and add a `provider_paths.xml` file to it with the following content:

```xml
<?xml version="1.0" encoding="utf-8"?>
<paths xmlns:android="http://schemas.android.com/apk/res/android">
    <external-path name="external_files" path="." />
    <root-path name="root_path" path="." />
</paths>
```

Import the following feature modules and `BaseActivity` from the SDK package. Then, in `Constants` under the `common` package, set `APP_ID` and `SDK_KEY` to the values you recorded earlier.
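For reference, the edited constants might look like the sketch below. The two values are placeholders for the APP_ID and SDK_KEY recorded in the developer center.

The `isConfigured` helper is a hypothetical addition, not part of the SDK demo. It makes it easy to fail fast before `FaceEngine.activeOnline` is called with unedited values.

```java
/** Sketch of common/Constants after editing; replace the placeholders with your own values. */
class Constants {
    // Placeholders: paste the values recorded in the ArcSoft developer center.
    public static final String APP_ID = "YOUR_APP_ID";
    public static final String SDK_KEY = "YOUR_SDK_KEY";

    /** Hypothetical helper: false while either value is blank or still a placeholder. */
    static boolean isConfigured(String appId, String sdkKey) {
        return isReal(appId) && isReal(sdkKey);
    }

    private static boolean isReal(String value) {
        return value != null && !value.trim().isEmpty() && !value.startsWith("YOUR_");
    }

    public static void main(String[] args) {
        // Prints false until the placeholders above are replaced
        System.out.println(isConfigured(APP_ID, SDK_KEY));
    }
}
```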

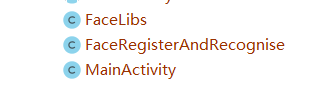

Create three activities: a main screen, a face library management screen, and a face recognition screen.

Five layout files also need to be added under the `layout` directory.

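The main screen has three jobs: requesting permissions, loading the native libraries, and activating the engine. I modified `onCreate()` and the `ConfigUtil` class in the `util` package so that two things happen automatically:

- The engine is activated as soon as the screen opens.
- The detection orientation is set to all-orientation face detection. The other orientation options did not seem to work for face recognition.

Below is the engine activation code.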

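```java
import android.support.v4.app.ActivityCompat;
import android.view.View;
import android.widget.Toast;

import com.arcsoft.face.ErrorInfo;
import com.arcsoft.face.FaceEngine;

import io.reactivex.Observable;
import io.reactivex.ObservableEmitter;
import io.reactivex.ObservableOnSubscribe;
import io.reactivex.Observer;
import io.reactivex.android.schedulers.AndroidSchedulers;
import io.reactivex.disposables.Disposable;
import io.reactivex.schedulers.Schedulers;

// Inside MainActivity
public void activeEngine(final View view) {
    // The .so files copied into the project must have been found
    if (!libraryExists) {
        Toast.makeText(this, "Library files not found!", Toast.LENGTH_SHORT).show();
        return;
    }
    // Ask for any missing runtime permissions first
    if (!checkPermissions(NEEDED_PERMISSIONS)) {
        ActivityCompat.requestPermissions(this, NEEDED_PERMISSIONS, ACTION_REQUEST_PERMISSIONS);
        return;
    }
    // Prevent repeated taps while activation is in progress
    if (view != null) {
        view.setClickable(false);
    }
    Observable.create(new ObservableOnSubscribe<Integer>() {
        @Override
        public void subscribe(ObservableEmitter<Integer> emitter) {
            // Online activation needs the network, so it runs on an I/O thread
            int activeCode = FaceEngine.activeOnline(MainActivity.this, Constants.APP_ID, Constants.SDK_KEY);
            emitter.onNext(activeCode);
        }
    })
            .subscribeOn(Schedulers.io())
            .observeOn(AndroidSchedulers.mainThread())
            .subscribe(new Observer<Integer>() {
                @Override
                public void onSubscribe(Disposable d) {
                }

                @Override
                public void onNext(Integer activeCode) {
                    if (activeCode == ErrorInfo.MOK) {
                        Toast.makeText(MainActivity.this, "Activation succeeded!", Toast.LENGTH_SHORT).show();
                    } else if (activeCode == ErrorInfo.MERR_ASF_ALREADY_ACTIVATED) {
                        Toast.makeText(MainActivity.this, "Already activated!", Toast.LENGTH_SHORT).show();
                    } else {
                        Toast.makeText(MainActivity.this, "Activation failed, code: " + activeCode, Toast.LENGTH_SHORT).show();
                    }
                    if (view != null) {
                        view.setClickable(true);
                    }
                }

                @Override
                public void onError(Throwable e) {
                    Toast.makeText(MainActivity.this, e.getMessage(), Toast.LENGTH_SHORT).show();
                    if (view != null) {
                        view.setClickable(true);
                    }
                }

                @Override
                public void onComplete() {
                }
            });
}
```

The face recognition screen is the most complex one. Besides recognition itself, it also handles face registration and liveness detection.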
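Much of that complexity is per-face bookkeeping keyed by track ID. The plain-Java sketch below isolates three decisions made in the camera code that follows:

- A feature request is sent only when a face has no status yet or is marked `TO_RETRY`.
- With liveness detection on, a face is searched only once it is `ALIVE`; otherwise the check is repeated after a delay.
- A failed extraction becomes `FAILED` only after the retry counter exceeds `MAX_RETRY_TIME`.

It rests on a few assumptions:

- The enums are hypothetical stand-ins for the demo's `RequestFeatureStatus` and `LivenessInfo` integer constants.
- `MAX_RETRY_TIME` has an illustrative value.
- Searching the face library is reduced to returning an action.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical model of the per-track decisions made by the recognition screen. */
class FaceTrackDecisions {

    enum FeatureStatus { SEARCHING, SUCCEED, TO_RETRY, FAILED }
    enum Liveness { UNKNOWN, ALIVE, NOT_ALIVE }
    enum Action { SEARCH, WAIT_AND_RECHECK }

    static final int MAX_RETRY_TIME = 3; // illustrative value, not taken from the SDK demo

    private final Map<Integer, Integer> extractErrorRetryMap = new HashMap<>();

    /** onPreview: request feature extraction only for new faces or faces marked TO_RETRY. */
    static boolean shouldRequestFeature(FeatureStatus status) {
        return status == null || status == FeatureStatus.TO_RETRY;
    }

    /** onFaceFeatureInfoGet, success path: search now, or re-check after a delay. */
    static Action afterFeatureExtracted(boolean livenessDetect, Liveness liveness) {
        if (!livenessDetect || liveness == Liveness.ALIVE) {
            return Action.SEARCH;
        }
        return Action.WAIT_AND_RECHECK; // liveness still pending, or NOT_ALIVE
    }

    /** onFaceFeatureInfoGet, failure path: TO_RETRY until the retry budget is exceeded. */
    FeatureStatus afterExtractionFailed(int trackId) {
        int attempts = extractErrorRetryMap.merge(trackId, 1, Integer::sum);
        if (attempts > MAX_RETRY_TIME) {
            extractErrorRetryMap.put(trackId, 0); // reset the counter, as the camera code does
            return FeatureStatus.FAILED;
        }
        return FeatureStatus.TO_RETRY;
    }

    public static void main(String[] args) {
        FaceTrackDecisions decisions = new FaceTrackDecisions();
        for (int i = 1; i <= MAX_RETRY_TIME + 1; i++) {
            // Prints TO_RETRY three times, then FAILED
            System.out.println(decisions.afterExtractionFailed(7));
        }
        System.out.println(afterFeatureExtracted(true, Liveness.UNKNOWN)); // prints WAIT_AND_RECHECK
    }
}
```

Keeping these rules as small pure functions makes them easy to unit-test apart from the camera and the engine.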

The following code implements face recognition and liveness detection with the phone's built-in camera:

```java
import android.graphics.Point;
import android.hardware.Camera;
import android.support.annotation.Nullable;
import android.util.Log;

import java.util.List;
import java.util.concurrent.TimeUnit;

import io.reactivex.Observable;
import io.reactivex.Observer;
import io.reactivex.disposables.Disposable;

// FaceEngine, ErrorInfo, FaceFeature and LivenessInfo come from the ArcFace SDK; FaceListener,
// CameraListener, FaceHelper, CameraHelper, DrawHelper, ConfigUtil and the other helpers come
// from the modules imported from the SDK package.
private void initCamera() {
    final FaceListener faceListener = new FaceListener() {
        @Override
        public void onFail(Exception e) {
            Log.e(TAG, "onFail: " + e.getMessage());
        }

        // Callback for a feature-extraction (FR) request
        @Override
        public void onFaceFeatureInfoGet(@Nullable final FaceFeature faceFeature, final Integer requestId, final Integer errorCode) {
            // Feature extraction succeeded
            if (faceFeature != null) {
                Integer liveness = livenessMap.get(requestId);
                // Liveness detection disabled: search the face library directly
                if (!livenessDetect) {
                    searchFace(faceFeature, requestId);
                }
                // Liveness check passed: search the face library
                else if (liveness != null && liveness == LivenessInfo.ALIVE) {
                    searchFace(faceFeature, requestId);
                }
                // No liveness result yet, or not alive: run this callback again after a delay
                else {
                    if (requestFeatureStatusMap.containsKey(requestId)) {
                        Observable.timer(WAIT_LIVENESS_INTERVAL, TimeUnit.MILLISECONDS)
                                .subscribe(new Observer<Long>() {
                                    Disposable disposable;

                                    @Override
                                    public void onSubscribe(Disposable d) {
                                        disposable = d;
                                        getFeatureDelayedDisposables.add(disposable);
                                    }

                                    @Override
                                    public void onNext(Long aLong) {
                                        onFaceFeatureInfoGet(faceFeature, requestId, errorCode);
                                    }

                                    @Override
                                    public void onError(Throwable e) {
                                    }

                                    @Override
                                    public void onComplete() {
                                        getFeatureDelayedDisposables.remove(disposable);
                                    }
                                });
                    }
                }
            }
            // Feature extraction failed
            else {
                if (increaseAndGetValue(extractErrorRetryMap, requestId) > MAX_RETRY_TIME) {
                    extractErrorRetryMap.put(requestId, 0);
                    String msg;
                    // The FaceInfo could not be resolved to a face in this RGB image; usually the face is blurry
                    if (errorCode != null && errorCode == ErrorInfo.MERR_FSDK_FACEFEATURE_LOW_CONFIDENCE_LEVEL) {
                        msg = "Low face confidence!";
                    } else {
                        msg = "ExtractCode:" + errorCode;
                    }
                    faceHelper.setName(requestId, "Not passed! " + msg);
                    // Extraction still failed after the maximum number of retries: recognition fails
                    requestFeatureStatusMap.put(requestId, RequestFeatureStatus.FAILED);
                    retryRecognizeDelayed(requestId);
                } else {
                    requestFeatureStatusMap.put(requestId, RequestFeatureStatus.TO_RETRY);
                }
            }
        }

        @Override
        public void onFaceLivenessInfoGet(@Nullable LivenessInfo livenessInfo, final Integer requestId, Integer errorCode) {
            if (livenessInfo != null) {
                int liveness = livenessInfo.getLiveness();
                livenessMap.put(requestId, liveness);
                // Not a live face: retry
                if (liveness == LivenessInfo.NOT_ALIVE) {
                    faceHelper.setName(requestId, "Not passed! Not a live face!");
                    // After FAIL_RETRY_INTERVAL, reset this face to UNKNOWN so the frame callback re-runs liveness detection
                    retryLivenessDetectDelayed(requestId);
                }
            } else {
                if (increaseAndGetValue(livenessErrorRetryMap, requestId) > MAX_RETRY_TIME) {
                    livenessErrorRetryMap.put(requestId, 0);
                    String msg;
                    // The FaceInfo could not be resolved to a face in this RGB image; usually the face is blurry
                    if (errorCode != null && errorCode == ErrorInfo.MERR_FSDK_FACEFEATURE_LOW_CONFIDENCE_LEVEL) {
                        msg = "Low face confidence!";
                    } else {
                        msg = "ProcessCode:" + errorCode;
                    }
                    faceHelper.setName(requestId, "Not passed! " + msg);
                    retryLivenessDetectDelayed(requestId);
                } else {
                    livenessMap.put(requestId, LivenessInfo.UNKNOWN);
                }
            }
        }
    };

    CameraListener cameraListener = new CameraListener() {
        @Override
        public void onCameraOpened(Camera camera, int cameraId, int displayOrientation, boolean isMirror) {
            Camera.Size lastPreviewSize = previewSize;
            previewSize = camera.getParameters().getPreviewSize();
            drawHelper = new DrawHelper(previewSize.width, previewSize.height, previewView.getWidth(), previewView.getHeight(),
                    displayOrientation, cameraId, isMirror, false, false);
            Log.i(TAG, "onCameraOpened: " + drawHelper.toString());
            // Switching cameras may change the preview size
            if (faceHelper == null || lastPreviewSize == null
                    || lastPreviewSize.width != previewSize.width || lastPreviewSize.height != previewSize.height) {
                Integer trackedFaceCount = null;
                // Remember the face counter at the moment of switching
                if (faceHelper != null) {
                    trackedFaceCount = faceHelper.getTrackedFaceCount();
                    faceHelper.release();
                }
                faceHelper = new FaceHelper.Builder()
                        .ftEngine(ftEngine)
                        .frEngine(frEngine)
                        .flEngine(flEngine)
                        .frQueueSize(MAX_DETECT_NUM)
                        .flQueueSize(MAX_DETECT_NUM)
                        .previewSize(previewSize)
                        .faceListener(faceListener)
                        .trackedFaceCount(trackedFaceCount == null
                                ? ConfigUtil.getTrackedFaceCount(FaceRegisterAndRecognise.this.getApplicationContext())
                                : trackedFaceCount)
                        .build();
            }
        }

        @Override
        public void onPreview(final byte[] nv21, Camera camera) {
            if (faceRectView != null) {
                faceRectView.clearFaceInfo();
            }
            List<FacePreviewInfo> facePreviewInfoList = faceHelper.onPreviewFrame(nv21);
            if (facePreviewInfoList != null && faceRectView != null && drawHelper != null) {
                drawPreviewInfo(facePreviewInfoList);
            }
            registerFace(nv21, facePreviewInfoList);
            clearLeftFace(facePreviewInfoList);

            if (facePreviewInfoList != null && facePreviewInfoList.size() > 0 && previewSize != null) {
                for (FacePreviewInfo previewInfo : facePreviewInfoList) {
                    int trackId = previewInfo.getTrackId();
                    Integer status = requestFeatureStatusMap.get(trackId);
                    // Request liveness detection for faces not yet recognized that have no result or pending request
                    if (livenessDetect && (status == null || status != RequestFeatureStatus.SUCCEED)) {
                        Integer liveness = livenessMap.get(trackId);
                        if (liveness == null || (liveness != LivenessInfo.ALIVE && liveness != LivenessInfo.NOT_ALIVE
                                && liveness != RequestLivenessStatus.ANALYZING)) {
                            livenessMap.put(trackId, RequestLivenessStatus.ANALYZING);
                            faceHelper.requestFaceLiveness(nv21, previewInfo.getFaceInfo(), previewSize.width, previewSize.height,
                                    FaceEngine.CP_PAF_NV21, trackId, LivenessType.RGB);
                        }
                    }
                    // Request feature extraction for new faces or faces marked for retry
                    if (status == null || status == RequestFeatureStatus.TO_RETRY) {
                        requestFeatureStatusMap.put(trackId, RequestFeatureStatus.SEARCHING);
                        faceHelper.requestFaceFeature(nv21, previewInfo.getFaceInfo(), previewSize.width, previewSize.height,
                                FaceEngine.CP_PAF_NV21, trackId);
                    }
                }
            }
        }

        @Override
        public void onCameraClosed() {
            Log.i(TAG, "onCameraClosed: ");
        }

        @Override
        public void onCameraError(Exception e) {
            Log.i(TAG, "onCameraError: " + e.getMessage());
        }

        @Override
        public void onCameraConfigurationChanged(int cameraID, int displayOrientation) {
            if (drawHelper != null) {
                drawHelper.setCameraDisplayOrientation(displayOrientation);
            }
            Log.i(TAG, "onCameraConfigurationChanged: " + cameraID + " " + displayOrientation);
        }
    };

    cameraHelper = new CameraHelper.Builder()
            .previewViewSize(new Point(previewView.getMeasuredWidth(), previewView.getMeasuredHeight()))
            .rotation(getWindowManager().getDefaultDisplay().getRotation())
            .specificCameraId(rgbCameraID != null ? rgbCameraID : Camera.CameraInfo.CAMERA_FACING_FRONT)
            .isMirror(false)
            .previewOn(previewView)
            .cameraListener(cameraListener)
            .build();
    cameraHelper.init();
    cameraHelper.start();
}
```

The face registration logic:

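```java
import java.util.List;

import io.reactivex.Observable;
import io.reactivex.ObservableEmitter;
import io.reactivex.ObservableOnSubscribe;
import io.reactivex.Observer;
import io.reactivex.android.schedulers.AndroidSchedulers;
import io.reactivex.disposables.Disposable;
import io.reactivex.schedulers.Schedulers;

// Called from onPreview for every frame; FaceServer comes from the modules imported from the SDK package.
private void registerFace(final byte[] nv21, final List<FacePreviewInfo> facePreviewInfoList) {
    // Register only when registerStatus is READY and at least one face is in view
    if (registerStatus == REGISTER_STATUS_READY && facePreviewInfoList != null && facePreviewInfoList.size() > 0) {
        // Switch to PROCESSING so the following frames do not start another registration
        registerStatus = REGISTER_STATUS_PROCESSING;
        Observable.create(new ObservableOnSubscribe<Boolean>() {
            @Override
            public void subscribe(ObservableEmitter<Boolean> emitter) {
                // Clone the frame because it is processed on a background thread
                boolean success = FaceServer.getInstance().registerNv21(FaceRegisterAndRecognise.this, nv21.clone(),
                        previewSize.width, previewSize.height, facePreviewInfoList.get(0).getFaceInfo(),
                        "registered " + faceHelper.getTrackedFaceCount());
                emitter.onNext(success);
            }
        })
                .subscribeOn(Schedulers.computation())
                .observeOn(AndroidSchedulers.mainThread())
                .subscribe(new Observer<Boolean>() {
                    @Override
                    public void onSubscribe(Disposable d) {
                    }

                    @Override
                    public void onNext(Boolean success) {
                        String result = success ? "register success!" : "register failed!";
                        showToast(result);
                        registerStatus = REGISTER_STATUS_DONE;
                    }

                    @Override
                    public void onError(Throwable e) {
                        e.printStackTrace();
                        showToast("register failed!");
                        registerStatus = REGISTER_STATUS_DONE;
                    }

                    @Override
                    public void onComplete() {
                    }
                });
    }
}
```

The face library management screen: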

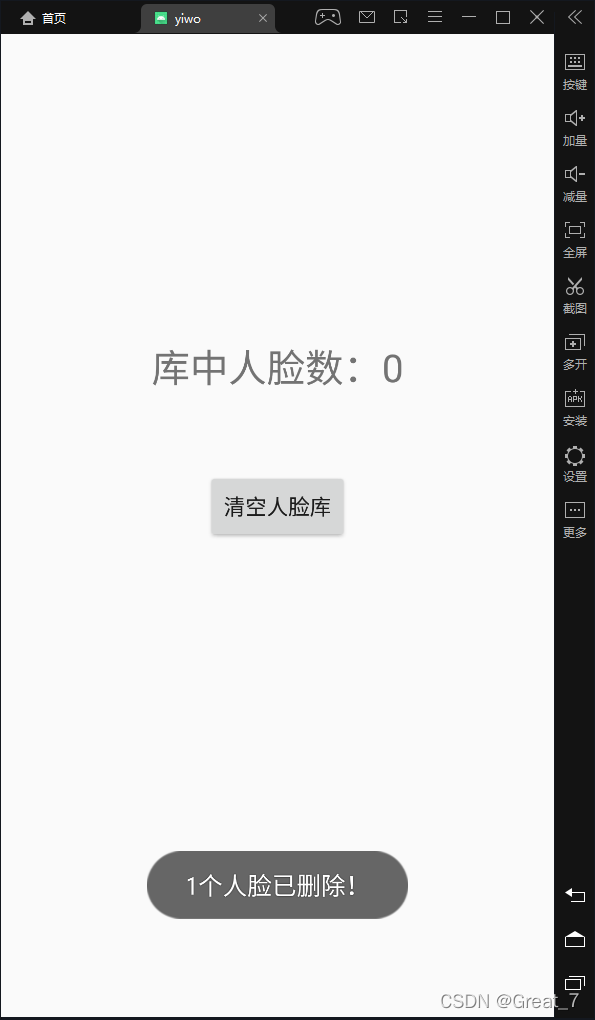
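Conceptually, the face library managed on this screen is a collection of registered face features. `getFaceNumber` counts them, `clearAllFaces` deletes them, and recognition presumably looks for the registered face most similar to a new feature, above some threshold.

The sketch below illustrates that idea with cosine similarity over plain `float[]` vectors. This is a swapped-in stand-in, not ArcSoft's method: the SDK's features are opaque byte data compared by the engine itself (for example via `compareFaceFeature`). The class, its methods, and the threshold are hypothetical. The screen's actual activity code follows.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical in-memory face library using cosine similarity (not ArcSoft's algorithm). */
class InMemoryFaceLibrary {

    private final Map<String, float[]> features = new LinkedHashMap<>();

    void register(String name, float[] feature) {
        features.put(name, feature.clone());
    }

    int getFaceNumber() {
        return features.size();
    }

    /** Removes every registered face and returns how many were deleted. */
    int clearAllFaces() {
        int count = features.size();
        features.clear();
        return count;
    }

    /** Name of the most similar registered face, or null if none reaches the threshold. */
    String search(float[] probe, double threshold) {
        String bestName = null;
        double bestScore = Double.NEGATIVE_INFINITY;
        for (Map.Entry<String, float[]> entry : features.entrySet()) {
            double score = cosine(probe, entry.getValue());
            if (score > bestScore) {
                bestScore = score;
                bestName = entry.getKey();
            }
        }
        return bestScore >= threshold ? bestName : null;
    }

    private static double cosine(float[] a, float[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("feature lengths differ");
        }
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        return (normA == 0 || normB == 0) ? 0 : dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    public static void main(String[] args) {
        InMemoryFaceLibrary library = new InMemoryFaceLibrary();
        library.register("alice", new float[]{1f, 0f, 0f});
        library.register("bob", new float[]{0f, 1f, 0f});
        System.out.println(library.search(new float[]{0.9f, 0.1f, 0f}, 0.8)); // prints alice
        System.out.println(library.search(new float[]{0f, 0f, 1f}, 0.8)); // prints null
        System.out.println(library.clearAllFaces() + " faces deleted"); // prints 2 faces deleted
    }
}
```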

```java
import android.Manifest;
import android.app.ProgressDialog;
import android.content.DialogInterface;
import android.os.Bundle;
import android.support.v7.app.AlertDialog;
import android.view.View;
import android.view.WindowManager;
import android.widget.TextView;

import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// BaseActivity and FaceServer come from the modules imported from the SDK package.
public class FaceLibs extends BaseActivity {
    private ExecutorService executorService;
    private TextView textView;
    private TextView tvNotificationRegisterResult;
    private ProgressDialog progressDialog = null;

    private static final int ACTION_REQUEST_PERMISSIONS = 0x001;
    private static final String[] NEEDED_PERMISSIONS = new String[]{
            Manifest.permission.READ_EXTERNAL_STORAGE,
            Manifest.permission.WRITE_EXTERNAL_STORAGE
    };

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_face_libs);
        getWindow().addFlags(WindowManager.LayoutParams.FLAG_KEEP_SCREEN_ON);

        executorService = Executors.newSingleThreadExecutor();
        tvNotificationRegisterResult = findViewById(R.id.notification_register_result);
        progressDialog = new ProgressDialog(this);

        // Initialize FaceServer before querying it, then show the number of registered faces
        FaceServer.getInstance().init(this);
        int faceLibNum = FaceServer.getInstance().getFaceNumber(this);
        textView = findViewById(R.id.number);
        textView.setText(String.valueOf(faceLibNum));
    }

    @Override
    protected void onDestroy() {
        if (executorService != null && !executorService.isShutdown()) {
            executorService.shutdownNow();
        }
        if (progressDialog != null && progressDialog.isShowing()) {
            progressDialog.dismiss();
        }
        FaceServer.getInstance().unInit();
        super.onDestroy();
    }

    @Override
    void afterRequestPermission(int requestCode, boolean isAllGranted) {
        // Nothing to do after the permission result on this screen
    }

    // Click handler: asks for confirmation, then deletes all registered faces
    public void clearFaces(View view) {
        int faceNum = FaceServer.getInstance().getFaceNumber(this);
        if (faceNum == 0) {
            showToast("The face library is already empty!");
        } else {
            AlertDialog dialog = new AlertDialog.Builder(this)
                    .setTitle("Notice")
                    .setMessage("Delete all " + faceNum + " faces?")
                    .setPositiveButton("OK", new DialogInterface.OnClickListener() {
                        @Override
                        public void onClick(DialogInterface dialog, int which) {
                            int deleteCount = FaceServer.getInstance().clearAllFaces(FaceLibs.this);
                            showToast(deleteCount + " face(s) deleted!");
                            textView.setText("0");
                        }
                    })
                    .setNegativeButton("Cancel", null)
                    .create();
            dialog.show();
        }
    }
}
```

That covers the overall approach; a few smaller details are best worked out hands-on. Now let's look at the result.

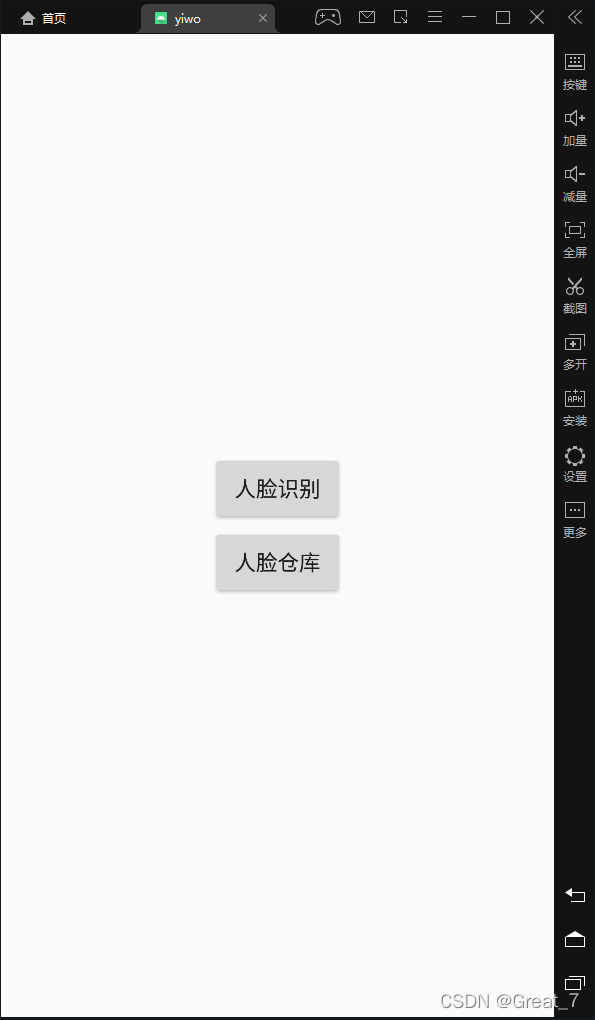

主界面:

注册成功并通过识别:

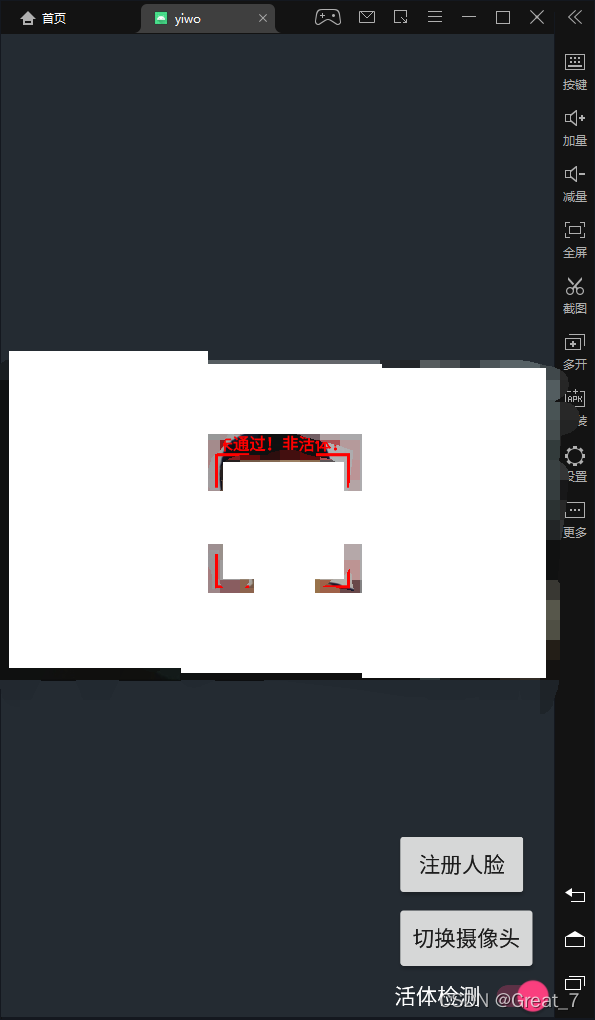

通过手机照片识别出不是活体:

清理人脸库:

That's all for "How to Implement Face Recognition on Android with ArcSoft". Thanks for reading, and I hope it helps!